In the rapidly evolving landscape of AI cybercrime, a new threat has emerged that’s causing security experts to lose sleep. Welcome to the shadowy world of “Malla” – where artificial intelligence takes a sinister turn, and language models become weapons in the hands of cybercriminals.

The rise of AI cybercrime represents a paradigm shift in the digital underworld. As cybercriminals harness the power of language models, they’re creating a new class of threats that are more sophisticated, scalable, and harder to detect than ever before.

What in the World Is a Malla?

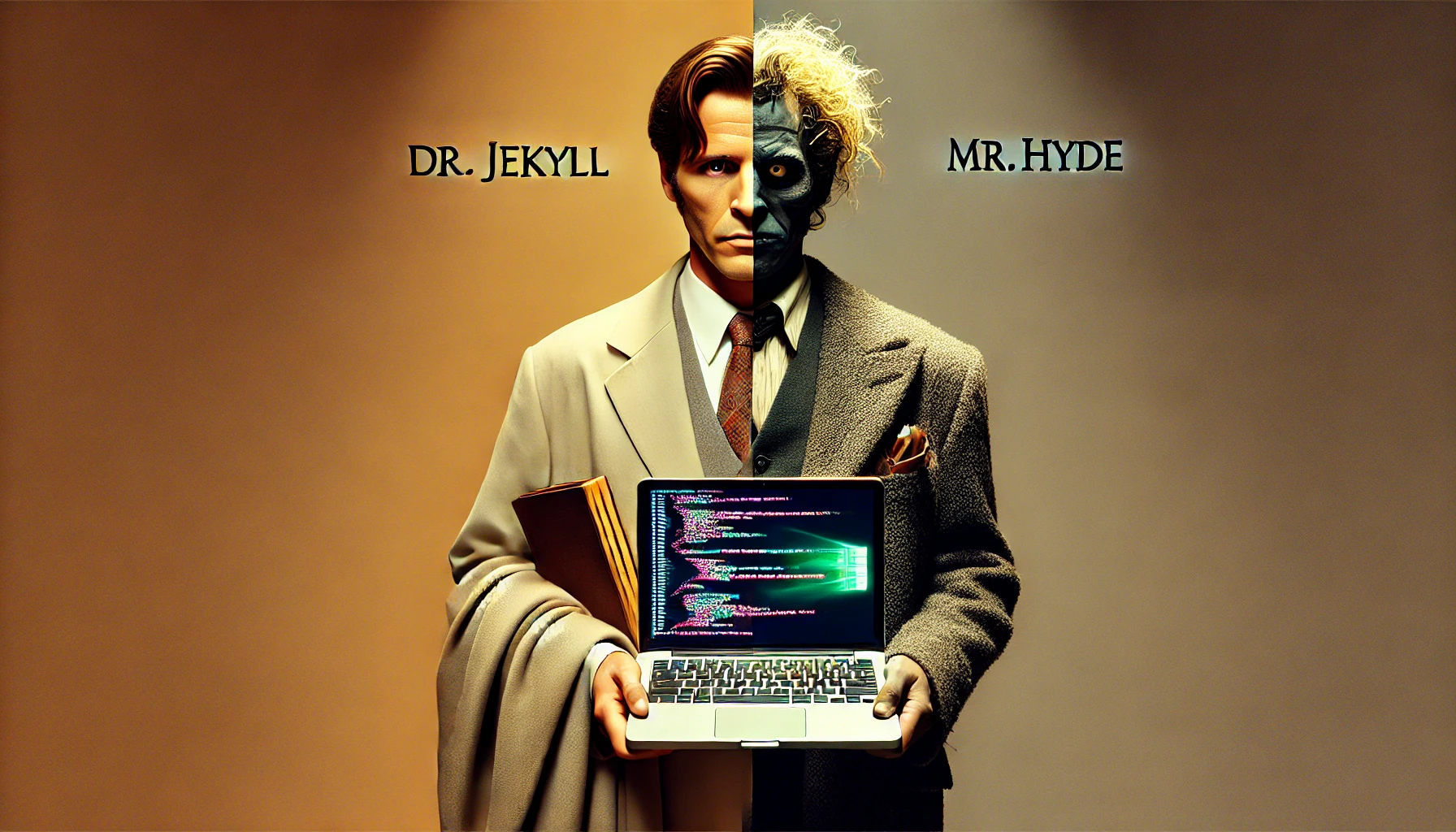

No, it’s not a new type of pasta or a trendy yoga pose. “Malla” stands for “Malicious LLM Application.” In simpler terms, it’s when the bad guys get their hands on AI language models and use them for nefarious purposes. Think of it as giving a supercomputer to Dr. Evil – nothing good can come of it.

A recent study by researchers at Indiana University Bloomington has pulled back the curtain on this underground AI economy, and boy, is it eye-opening. They looked at over 200 examples of these malicious AI services lurking in the dark corners of the internet between April and October 2023. It’s like finding out there’s an evil twin of ChatGPT that’s been raised by wolves – and now it’s loose on the internet.

The Rise of the Machines (The Naughty Ones)

Remember when we thought the worst thing AI could do was beat us at chess? Those were simpler times. Now, we’re dealing with AI that can craft phishing emails so convincing, they’d make a Nigerian prince blush with envy. These Mallas aren’t just party tricks; they’re fully-fledged criminal enterprises.

The researchers found two main flavors of Malla:

- The Rebel Without a Cause: These are uncensored LLMs, often based on open-source models. They’re like ChatGPT’s edgy cousin who never learned about ethics or legal boundaries.

- The Great Escaper: These are commercial LLMs that have been “jailbroken” using clever prompts. Imagine if Houdini were an AI and decided to use his escape artist skills for evil.

And here’s the kicker – these things are making bank. One Malla service, proudly named “WormGPT” (points for honesty, I guess), raked in a cool $28,000 in just two months. That’s more than most of us make from our side hustles selling handmade crafts on Etsy.

The Naughty List of AI Capabilities

So, what exactly can these bad-boy AIs do? Let’s break it down:

- Malicious Code Generation: About 93% of Mallas can write malware faster than you can say “antivirus.” It’s like having an evil genius coder on speed dial.

- Phishing Email Crafting: Around 41% are experts at writing emails that would make even the most suspicious grandma click on that link. Gone are the days of obvious spelling errors and promises of Nigerian fortunes.

- Scam Website Creation: About 17% can whip up fake websites faster than you can say “Is this really my bank’s login page?” It’s like having a con artist with a degree in web design.

But wait, there’s more! Some of these Mallas are disturbingly good at their jobs. Take DarkGPT and EscapeGPT, for instance. These digital ne’er-do-wells can produce malicious code that works about two-thirds of the time and – here’s the scary part – often flies under the radar of antivirus software. It’s like they’re wearing an invisibility cloak while picking your digital pocket.

And let’s not forget WolfGPT, the smooth criminal of the bunch. This AI is a phishing email maestro, crafting messages so slick they slide right past most spam detectors. It’s like having a silver-tongued con artist who never sleeps and can write a million emails a minute.

The Underground AI Economy: Where Bits Meet Black Markets

Now, you might be wondering, “How does one go about acquiring such a dubious digital assistant?” Well, much like other illicit goods, these Mallas are traded on underground forums and marketplaces. It’s like eBay, but instead of vintage action figures and slightly used lawn mowers, you’re browsing for AI that can help you commit cybercrimes.

The pricing models are fascinating (in a horrifying sort of way):

- WolfGPT: A steal at a flat fee of just $150. It’s like buying a lifetime subscription to crime.

- DarkGPT: For the budget-conscious cybercriminal, it’s only 78 cents per 50 messages. Cheaper than texting!

- EscapeGPT: A subscription service at $64.98 a month. Because even evil AIs have recurring revenue models.

What’s particularly alarming is how these prices compare to traditional malware services. Mallas are often significantly cheaper, making the barrier to entry for cybercrime lower than ever. It’s like the fast food of digital malfeasance – cheap, quick, and terrible for society’s health.
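For the spreadsheet-minded, here is a back-of-the-envelope sketch that puts the three price tags above on the same footing. It only uses the prices quoted in this article; the function names are made up for illustration, and it assumes DarkGPT's bundles are billed pro rata and that none of the plans cap usage, neither of which the study summary confirms.

```python
# Prices as quoted in the article, in US dollars.
WOLFGPT_FLAT_FEE = 150.00          # one-off payment
ESCAPEGPT_MONTHLY_FEE = 64.98      # recurring subscription
DARKGPT_BUNDLE_PRICE = 0.78        # price of one bundle
DARKGPT_BUNDLE_SIZE = 50           # messages per bundle


def darkgpt_cost(messages: int) -> float:
    """Cost of `messages` messages on DarkGPT.

    Assumption (not from the article): partial bundles are billed pro rata.
    """
    return messages * DARKGPT_BUNDLE_PRICE / DARKGPT_BUNDLE_SIZE


def break_even_messages(fixed_price: float) -> float:
    """Number of DarkGPT messages whose cost equals `fixed_price`."""
    return fixed_price * DARKGPT_BUNDLE_SIZE / DARKGPT_BUNDLE_PRICE


if __name__ == "__main__":
    print(f"DarkGPT cost per message: ${darkgpt_cost(1):.4f}")
    print(
        "Messages before EscapeGPT's monthly fee is cheaper: "
        f"{break_even_messages(ESCAPEGPT_MONTHLY_FEE):.0f}"
    )
    print(
        "Messages before WolfGPT's flat fee is cheaper: "
        f"{break_even_messages(WOLFGPT_FLAT_FEE):.0f}"
    )
```

Under those assumptions, pay-as-you-go works out to about a cent and a half per message, and a buyer would need roughly 4,165 messages in a month before the EscapeGPT subscription costs less, or roughly 9,615 messages in total before WolfGPT's flat fee does. Either way, the entry ticket is pocket change.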

The Good, the Bad, and the Binary

Now, before we all panic and throw our computers into the sea, let’s take a deep breath. Yes, this is concerning, but it’s not the AI apocalypse… yet.

First, the existence of these tools isn’t entirely surprising. As one of the study’s authors, Professor Xiaofeng Wang, points out, “It’s almost inevitable for cybercriminals to utilize AI. Every technology always comes with two sides.” He’s right – from the invention of the wheel (great for transportation, also great for getaway vehicles) to the internet itself, every major technological advancement has been adopted by those with less-than-noble intentions.

Moreover, this research is a crucial first step in combating these threats. By understanding how cybercriminals are using AI, we can develop better defenses. It’s like studying the villain’s playbook to build a better superhero.

Fighting Fire with Fire (or AI with AI)

So, what can be done about this digital den of iniquity? The researchers, along with outside experts, have some suggestions:

- Know Your Customer: Andrew Hundt, a computing innovation fellow at Carnegie Mellon University, suggests that AI companies should implement stricter “know-your-customer” policies. It’s like carding everyone at the AI bar, not just the ones who look underage.

- Better Legal Frameworks: We need laws that hold AI companies more accountable for how their creations are used. It’s like making sure the person who invented the lock isn’t also selling lockpicking tools on the side.

- Improved Detection Methods: By studying these Mallas, we can develop better ways to spot and stop them. It’s like training our digital immune system to recognize these new AI viruses.

- Aligning AI with Ethics: The paper suggests using techniques like reinforcement learning from human feedback to make AI models more resistant to being used for evil. It’s like giving AI a stronger moral compass.

- Continuous Monitoring: The researchers advocate for ongoing surveillance of these threats, constantly updating our defenses as new Malla techniques emerge. It’s a never-ending game of digital whack-a-mole.

The Human Element: Why We Can’t Just Blame the Bots

While it’s easy to point fingers at the AI, we can’t forget the human element in all of this. These tools, as sophisticated as they are, are still just that – tools. They’re being created, sold, and used by people.

This brings us to an important point: digital literacy and cybersecurity awareness are more crucial than ever. As these AI-powered threats become more sophisticated, our ability to recognize and resist them needs to level up too.

It’s not just about having a strong password anymore (though please, for the love of all that is holy, stop using “password123”). It’s about developing a healthy skepticism for unsolicited emails, being cautious about the websites we visit, and understanding that just because something looks or sounds legitimate doesn’t mean it is.

The Future: Cloudy with a Chance of AI Mayhem

So, what does the future hold? Will we be overrun by an army of silver-tongued AI scammers, or will we find a way to harness the power of AI for good?

The truth, as always, probably lies somewhere in the middle. As AI technology continues to advance, we can expect both its beneficial and malicious applications to become more sophisticated. It’s an arms race, with cybersecurity experts and cybercriminals constantly trying to outmaneuver each other.

But here’s the silver lining: studies like this one are shining a light on these threats before they become overwhelming. By understanding the problem, we’re better equipped to solve it. It’s like getting a peek at the enemy’s battle plans before the war even starts.

Conclusion: Don’t Panic, But Do Pay Attention

As we navigate this brave new world of AI, it’s important to strike a balance between caution and optimism. Yes, the existence of Mallas is concerning, but it’s also a testament to the incredible power and flexibility of AI technology. The same underlying principles that allow these malicious applications to exist also drive innovations in medicine, climate science, and countless other fields that benefit humanity.

The key is to stay informed, support responsible AI development, and maybe think twice before clicking on that email promising you’ve won a lottery you never entered. Remember, in the digital world, if something seems too good to be true, it probably is – especially if it’s trying to sell you a bridge or asking for your bank details.

As we move forward, let’s embrace the potential of AI while keeping a watchful eye on its darker applications. After all, the best way to fight bad AI is with good AI – and even better human judgment.

Stay safe out there in the wild, wild web, folks. And remember, the next time your computer asks “Are you human?”, make sure you’re really, really sure before you answer.