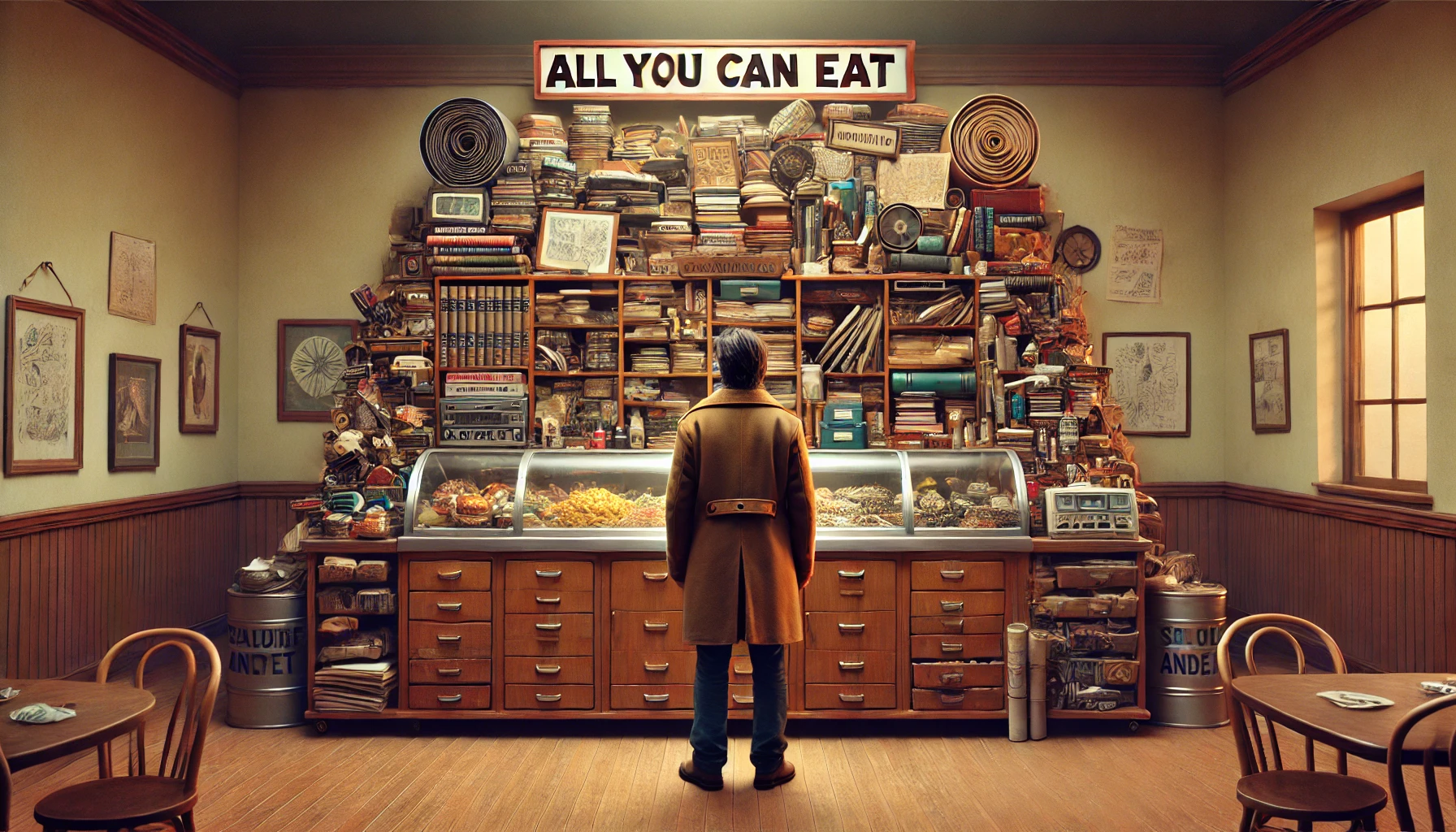

In 2005, Herb Sutter published a seminal article titled “The Free Lunch Is Over,” which marked a fundamental shift in software development. The article discussed how developers could no longer rely on CPU clock speed improvements to automatically make their programs run faster. Nearly two decades later, we’re witnessing a similar inflection point in artificial intelligence and large language models (LLMs). This time, the “free lunch” isn’t about CPU speeds – it’s about the assumption that we can indefinitely scale our way to better AI performance.

The Original Free Lunch

To understand the parallel, let’s first revisit Sutter’s original argument. For decades, software developers enjoyed a “free lunch” thanks to Moore’s Law and the consistent improvement in CPU clock speeds. Programs would naturally run faster on newer hardware without requiring any modifications to the code. This era came to an end when CPU manufacturers hit physical limits in clock speed scaling, primarily due to power consumption and heat dissipation issues.

The solution wasn’t to keep pushing clock speeds higher but to change the approach fundamentally: moving to multi-core processors and parallel computing. This required developers to dramatically change how they wrote software, learning new programming paradigms and dealing with the complexities of concurrent programming.

Today’s AI Free Lunch

Fast forward to 2024, and we’re experiencing a different kind of free lunch in the AI revolution. Organizations worldwide are leveraging large language models and AI tools to boost productivity, automate tasks, and create new capabilities. The current approach often follows a simple formula: when we want better performance, we scale up the model – more parameters, more training data, more computing power.

This scaling-focused approach has given us impressive results, from GPT-3 to GPT-4, from BERT to PaLM. Each leap in model size has brought new capabilities and better performance. But just as with CPU clock speeds, we’re starting to see signs that this approach has its limits.

The Signs of Another Free Lunch Ending

1. Computational Demands and Costs

Training modern large language models requires enormous computational resources. GPT-3, with its 175 billion parameters, reportedly cost millions of dollars to train. The resource requirements for training these models are growing exponentially with each generation, following what some researchers call the “compute curve.”

This exponential growth in computing requirements presents several challenges:

- Training costs becoming prohibitive for all but the largest organizations

- Increased environmental impact due to massive energy consumption

- Limited availability of specialized hardware (like high-end GPUs) needed for training

- Growing complexity in managing and orchestrating large-scale training operations
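To put the 175 billion parameter figure in perspective, here is a rough back-of-the-envelope sketch. It uses the common rule of thumb that training compute is about 6 × parameters × training tokens. The 300 billion token count is the figure reported for GPT-3. The per-GPU throughput and hourly price are purely hypothetical placeholders, so treat the output as an order-of-magnitude illustration rather than an actual cost.

```python
# Rule-of-thumb estimate of training compute: C ≈ 6 * N * D,
# where N is the parameter count and D is the number of training tokens.

SECONDS_PER_HOUR = 3600


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total floating-point operations needed for training."""
    return 6.0 * n_params * n_tokens


def gpu_hours(total_flops: float, sustained_flops_per_gpu: float) -> float:
    """Convert total FLOPs into GPU-hours at a given sustained throughput."""
    return total_flops / sustained_flops_per_gpu / SECONDS_PER_HOUR


N_PARAMS = 175e9                   # GPT-3 parameter count
N_TOKENS = 300e9                   # training tokens reported for GPT-3
ASSUMED_FLOPS_PER_GPU = 100e12     # hypothetical sustained FLOP/s per accelerator
ASSUMED_PRICE_PER_GPU_HOUR = 2.0   # hypothetical price in USD

flops = training_flops(N_PARAMS, N_TOKENS)
hours = gpu_hours(flops, ASSUMED_FLOPS_PER_GPU)
cost = hours * ASSUMED_PRICE_PER_GPU_HOUR

print(f"Estimated compute: {flops:.2e} FLOPs")
print(f"Estimated GPU-hours: {hours:,.0f}")
print(f"Estimated cost: ${cost:,.0f}")
```

Under these assumptions the estimate comes to roughly 3.15 × 10^23 FLOPs and a cost in the low millions of dollars. Because compute in this approximation is the product of parameters and tokens, scaling both by 10x multiplies the bill by 100x.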

2. Diminishing Returns

Just as CPU manufacturers hit physical limits with clock speeds, AI researchers are encountering their own set of diminishing returns:

- Performance doesn’t improve linearly with model size

- Larger models don’t necessarily lead to proportionally better results in specialized tasks

- Training efficiency decreases as models grow larger

- The gap between theory and practical application is widening
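One way to picture these diminishing returns is a power-law curve, where loss falls as a small negative power of model size. The sketch below assumes that functional form, and both the `scale` constant and the `alpha` exponent are hypothetical values chosen only for illustration, not measurements from any particular model.

```python
# Illustrative power-law curve: loss = scale * N^(-alpha).
# The form and both constants are assumptions made for this sketch.

def power_law_loss(n_params: float, scale: float = 1.0, alpha: float = 0.08) -> float:
    """Hypothetical loss as a function of parameter count."""
    return scale * n_params ** (-alpha)


sizes = [1e9, 1e10, 1e11, 1e12]  # each step is a 10x increase in parameters
losses = [power_law_loss(n) for n in sizes]

# Absolute improvement bought by each successive 10x increase in size.
gains = [losses[i] - losses[i + 1] for i in range(len(losses) - 1)]

for n, loss in zip(sizes, losses):
    print(f"{n:.0e} params -> loss {loss:.4f}")
for i, gain in enumerate(gains):
    print(f"10x step {i + 1}: improvement {gain:.4f}")
```

With a curve of this shape, every 10x increase in parameters multiplies the loss by the same factor, so the absolute improvement from each step shrinks while the cost of each step grows.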

3. Energy and Environmental Concerns

The environmental impact of AI training and inference is becoming a crucial consideration:

- Training a single large language model can emit as much carbon as several cars over their entire lifetimes

- Data centers running AI models require significant cooling infrastructure

- The energy consumption of AI applications is becoming a major cost factor for organizations

4. Quality and Reliability Challenges

Bigger models don’t automatically solve fundamental AI challenges:

- Hallucinations and factual accuracy remain significant issues

- Bias and fairness problems can be amplified in larger models

- Model behavior becomes harder to predict and control

- Testing and validation become increasingly complex

The New Paradigm Shift

Just as developers had to embrace parallel programming in response to the end of CPU scaling, the AI field is beginning to shift toward new approaches:

1. Architectural Innovation

Rather than simply scaling up existing architectures, researchers are exploring:

- More efficient attention mechanisms

- Sparse model architectures

- Mixture of experts (MoE) approaches

- Novel training methodologies

- Smaller, more specialized models
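To make the mixture-of-experts idea more concrete, here is a minimal sketch of top-k routing in plain Python. It is a toy under stated assumptions: the experts are simple hypothetical functions, and the gate scores are passed in by hand, whereas a real model would compute them from the input with a learned router.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    # Weights are renormalized over the selected experts only.
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the outputs of the k selected experts; the rest are skipped."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


# Hypothetical experts; in a real model each would be a neural sub-network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_logits = [0.1, 2.0, 1.5, -1.0]  # hypothetical router scores for one input

routes = top_k_route(gate_logits, k=2)
output = moe_forward(3.0, experts, gate_logits, k=2)
print(routes)
print(output)
```

The point of the design is that only `k` of the experts run for a given input, so total parameter count can grow without a matching increase in compute per input.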

2. Specialization and Fine-tuning

The future likely lies in:

- Domain-specific models optimized for particular tasks

- Efficient fine-tuning methods

- Better integration with traditional software systems

- Hybrid approaches combining different AI techniques

3. Focus on Efficiency

New research directions emphasize:

- Reduced training time and computational requirements

- Lower inference costs

- Better resource utilization

- Improved model compression techniques
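As one example of what model compression can look like, the sketch below applies symmetric 8-bit quantization to a handful of weights. It is a simplified illustration with made-up weight values. Production schemes typically add refinements such as per-channel scales and calibration data, which are not shown here.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

print(quantized)
print(restored)
print(max(errors))
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, at the price of a rounding error of at most half the scale per weight.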

4. Better Integration and Tooling

Success will require:

- More sophisticated development tools

- Better monitoring and observability

- Improved deployment and scaling infrastructure

- More robust testing and validation frameworks

What This Means for Organizations

Organizations need to prepare for this shift in several ways:

1. Strategic Planning

- Develop realistic expectations about AI capabilities and limitations

- Plan for increasing costs and complexity

- Consider the total cost of ownership, including training, deployment, and maintenance

- Invest in specialized expertise and tools

2. Technical Preparation

- Build expertise in model optimization and fine-tuning

- Develop infrastructure for efficient AI deployment

- Create robust testing and validation frameworks

- Implement monitoring and observability solutions

3. Skill Development

- Train developers in AI-specific tools and techniques

- Build expertise in prompt engineering and model optimization

- Develop capabilities in AI system integration

- Foster understanding of AI limitations and best practices

4. Risk Management

- Implement robust testing and validation procedures

- Develop strategies for managing AI-specific risks

- Create contingency plans for AI system failures

- Monitor and manage computational resources effectively

Looking to the Future

The end of the AI scaling free lunch doesn’t mean the end of AI progress. Just as the software industry adapted to multi-core processing, the AI field will adapt to these new challenges. We’re likely to see:

- More emphasis on efficient model architectures

- Better integration of AI with traditional software systems

- More sophisticated tools for AI development and deployment

- Increased focus on specialized, task-specific models

Conclusion

The parallels between Sutter’s “The Free Lunch Is Over” and the current state of AI development are striking. Just as software developers had to adapt to the end of automatic performance improvements from CPU scaling, AI practitioners must now adapt to the end of simple scaling-based improvements in AI performance.

This transition presents both challenges and opportunities. Organizations that recognize this shift early and adapt their strategies accordingly will be better positioned to succeed in the next phase of AI development. The future of AI lies not in blindly scaling up existing approaches, but in developing more sophisticated, efficient, and targeted solutions.

The free lunch may be over, but the kitchen is still open – we just need to learn how to cook more efficiently.