In the rapidly evolving field of artificial intelligence (AI), researchers and developers are constantly pushing the boundaries of what machines can do. The ultimate goal for many is the creation of Artificial General Intelligence (AGI) – a system capable of understanding, learning, and applying intelligence in a way comparable to human cognition across a wide range of tasks. However, in our eagerness to achieve this lofty aim, are we falling into a trap of superficial imitation rather than fostering genuine intelligence? This article explores the concept of the “AI Cargo Cult” – a phenomenon where the pursuit of AGI becomes mired in mimicry rather than true understanding and innovation.

Understanding the Cargo Cult Phenomenon

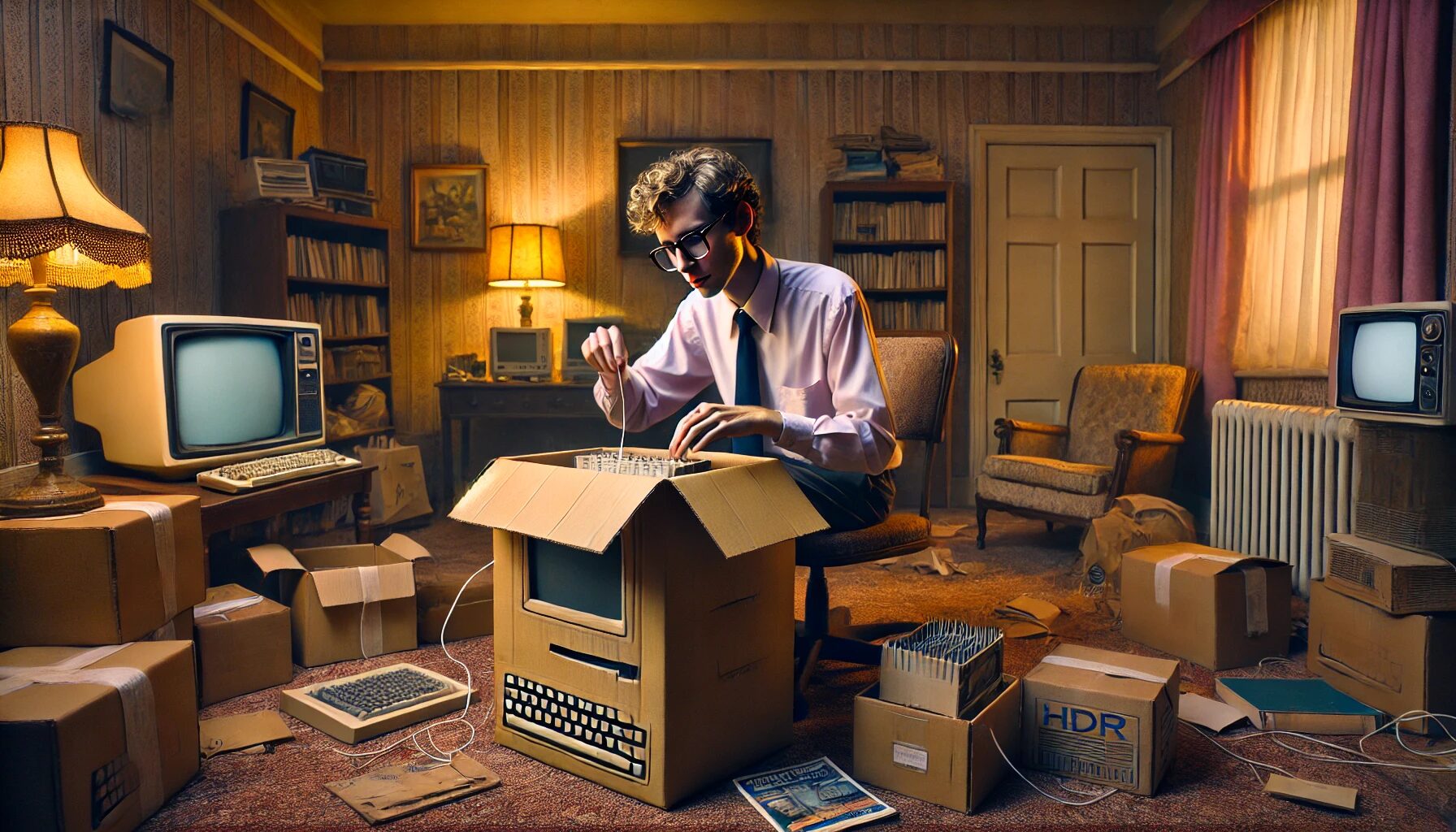

To fully grasp the implications of the AI Cargo Cult, we must first understand the origin and meaning of the term “cargo cult.” The phrase originates from practices observed in Melanesian cultures during and after World War II. These isolated communities witnessed the arrival of Western military forces, who brought with them advanced technology and abundant supplies (or “cargo”). After the war ended and the military departed, some islanders attempted to summon more cargo by building imitation airstrips, aircraft, and radio equipment out of local materials like wood and straw.

The cargo cultists had observed a correlation: the arrival of strange-looking people in uniform coincided with the appearance of valuable goods. In their attempt to recreate this perceived cause-and-effect relationship, they focused on mimicking the visible aspects of military presence without understanding the complex global economic and technological systems that actually produced and delivered the cargo.

The AI Cargo Cult: Mimicking Without Understanding

In the context of AI development, the cargo cult mentality manifests in several ways:

- Superficial Imitation of Human Intelligence: Many AI researchers and developers focus intensely on creating systems that can produce human-like outputs or behaviors. This approach often prioritizes passing tests designed to measure human-like performance (such as the Turing Test) over developing genuine problem-solving capabilities or understanding.

- Overemphasis on Benchmarks and Metrics: The AI community often places great importance on achieving high scores on standardized benchmarks. While these metrics can be useful for measuring progress, an overreliance on them can lead to systems that are optimized for specific tests rather than developing true, generalizable intelligence.

- Anthropocentric Bias: There’s a tendency to assume that human-like thinking is the only or best form of intelligence. This bias can limit exploration of alternative cognitive architectures that might be more efficient or effective for certain tasks.

- Neglecting Fundamental Principles: In the rush to achieve immediate, visible results, researchers might prioritize quick wins over developing a deeper understanding of the principles underlying intelligence and cognition.

- Misplaced Focus on Surface-Level Achievements: AI systems that can hold human-like conversations or create art in human styles are often celebrated without critical examination of whether they truly understand what they produce or are merely engaging in sophisticated pattern matching.
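
The gap between a benchmark score and generalizable ability can be made concrete with a toy sketch. Everything here is hypothetical and invented for illustration: the "benchmark" is single-digit addition, `Memorizer` stands in for a system tuned to the test, and `Adder` stands in for one that captures the underlying rule. It is not a model of any real AI system.

```python
def make_addition_items(pairs):
    """Turn (a, b) pairs into (question, answer) benchmark items."""
    return [(f"{a}+{b}", str(a + b)) for a, b in pairs]


class Memorizer:
    """Stores benchmark answers verbatim; it has no notion of addition."""

    def __init__(self, items):
        self.table = dict(items)

    def answer(self, question):
        # Anything not seen on the benchmark gets a placeholder answer.
        return self.table.get(question, "?")


class Adder:
    """Parses the question and actually computes the sum."""

    def answer(self, question):
        a, b = question.split("+")
        return str(int(a) + int(b))


def accuracy(model, items):
    """Fraction of items the model answers exactly right."""
    return sum(model.answer(q) == ans for q, ans in items) / len(items)


# Hypothetical benchmark: sums of single-digit numbers.
benchmark = make_addition_items([(a, b) for a in range(10) for b in range(10)])
# Slight variation on the same task: two-digit operands.
shifted = make_addition_items([(a, b) for a in range(10, 20) for b in range(10, 20)])

memorizer = Memorizer(benchmark)
adder = Adder()

print("memorizer:", accuracy(memorizer, benchmark), accuracy(memorizer, shifted))
print("adder:    ", accuracy(adder, benchmark), accuracy(adder, shifted))
```

Both systems are indistinguishable on the benchmark, and only the shifted set separates them. That is the sense in which a score alone says little about what a system has actually learned.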

The Consequences of Cargo Cult Thinking in AI

The AI Cargo Cult mentality can have several negative consequences for the field:

- Limited Innovation: By focusing too heavily on mimicking human intelligence, we may miss opportunities for AI to develop novel problem-solving approaches that could potentially surpass human capabilities in certain domains.

- Fragile Systems: Cargo cult thinking tends to produce AI systems that appear intelligent in narrow contexts but lack true understanding or adaptability. These systems may perform well on specific tasks or datasets but fail when confronted with slight variations or real-world complexity.

- Overlooked Potential: Failing to explore non-human-like forms of intelligence could mean overlooking AI architectures that are more efficient, effective, or suitable for certain tasks.

- Misaligned Goals: Pursuing human-like performance in areas where AI could potentially far surpass human capabilities may result in underutilizing AI’s potential.

- Ethical and Safety Concerns: Systems that mimic human-like outputs without true understanding could lead to unpredictable or unintended behaviors, especially in critical applications.

Case Studies: Cargo Cult Thinking in Action

To better understand how the AI Cargo Cult manifests in real-world AI development, let’s examine a few case studies:

1. Language Models and the Illusion of Understanding

Large language models like GPT-3 have made headlines for their ability to generate human-like text, engage in conversations, and even produce code. However, critics argue that these models, despite their impressive outputs, lack true understanding of the content they generate. They are essentially extremely sophisticated pattern-matching systems, trained on vast amounts of human-generated text.

The cargo cult mentality here manifests in the way some researchers and companies present these models as having human-like understanding or even consciousness, based solely on their ability to produce coherent text. This overlooks the fundamental differences between statistical pattern recognition and genuine comprehension.

2. Computer Vision and Human-Like Perception

Many computer vision systems are designed to mimic human visual perception, using architectures inspired by the human visual cortex. While this approach has led to significant advancements, it may also limit the potential for developing visual processing systems that surpass human capabilities.

For instance, humans are limited to perceiving a narrow band of the electromagnetic spectrum, while machines have no such inherent limitation. By focusing too heavily on recreating human-like vision, we might be missing opportunities to develop visual systems that can perceive and analyze a much wider range of information.
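
As one small illustration of machine perception outside the visible range, remote sensing commonly combines a visible red band with a near-infrared band into the Normalized Difference Vegetation Index (NDVI), defined as (NIR - Red) / (NIR + Red). The sketch below assumes per-pixel reflectance values between 0 and 1. The sample numbers are made up for illustration, not measurements, and returning 0 when both bands are zero is a convention chosen here.

```python
def ndvi(red, nir):
    """Normalized Difference Vegetation Index for a single pixel.

    red: reflectance in the visible red band (0..1)
    nir: reflectance in the near-infrared band (0..1), invisible to humans
    """
    total = nir + red
    if total == 0:
        return 0.0  # convention chosen here to avoid dividing by zero
    return (nir - red) / total


# Made-up reflectance values for illustration only, not measured data.
pixels = {
    "healthy_vegetation": {"red": 0.05, "nir": 0.50},
    "bare_soil": {"red": 0.30, "nir": 0.35},
}

for name, bands in pixels.items():
    print(name, round(ndvi(bands["red"], bands["nir"]), 2))
```

With these sample values, the vegetation pixel scores far higher than the soil pixel, a distinction driven mainly by a band that no human eye can see.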

3. Chess and Go: Beyond Human Strategies

The development of AI systems for playing chess and Go provides an interesting counterpoint to the cargo cult mentality. Early attempts at creating chess-playing computers tried to mimic human strategic thinking. However, the most successful systems played in ways that differed significantly from human approaches: Deep Blue relied on large-scale search over possible moves, and AlphaGo, which combined learning from human games with self-play, produced moves that surprised expert players.

These systems demonstrated that moving beyond simple imitation of human cognition can lead to superior performance. They serve as a reminder of the potential for AI to develop novel problem-solving approaches when not constrained by human cognitive biases.
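
To see how a game-playing program can reach perfect play without imitating human strategic thinking, consider exhaustive game-tree search. The sketch below is a toy, not Deep Blue’s or AlphaGo’s actual method: it solves an assumed simple game (one pile of stones, each player removes 1 to 3 per turn, whoever takes the last stone wins) by checking every line of play. The names `can_win` and `best_move` are hypothetical.

```python
from functools import lru_cache

MAX_TAKE = 3  # assumed rule: remove 1 to 3 stones per turn


@lru_cache(maxsize=None)
def can_win(stones):
    """True if the player to move can force a win with `stones` left.

    Whoever takes the last stone wins, so facing 0 stones means having lost.
    """
    return any(
        not can_win(stones - take)
        for take in range(1, min(MAX_TAKE, stones) + 1)
    )


def best_move(stones):
    """Return a winning number of stones to take, or None if every move loses."""
    for take in range(1, min(MAX_TAKE, stones) + 1):
        if not can_win(stones - take):
            return take
    return None


for pile in (10, 8):
    print(pile, "stones ->", best_move(pile))
```

The search encodes no strategic concepts, only the rules and the win condition, yet it plays this game perfectly. Real chess and Go programs add pruning, evaluation functions, or learned policies on top, because their game trees are far too large to enumerate.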

Moving Beyond the AI Cargo Cult

To truly advance the field of AI and make progress towards AGI, we need to move beyond cargo cult thinking. Here are some strategies for doing so:

- Embrace “Alien” Intelligence: Instead of always striving for human-like performance, we should encourage the development of AI systems that solve problems in ways that may be fundamentally different from human approaches. This could involve exploring new cognitive architectures, novel data representations, or unconventional problem-solving strategies.

- Focus on Fundamental Principles: Rather than getting caught up in surface-level imitation, AI research should prioritize understanding the fundamental principles of intelligence and problem-solving. This might involve deeper collaboration with fields like cognitive science, neuroscience, and philosophy to develop more robust theories of intelligence.

- Diversify Evaluation Metrics: We need to develop new ways to assess AI capabilities that don’t solely rely on comparison to human performance. This could involve creating more diverse and challenging test environments, or developing metrics that measure an AI system’s ability to generalize and adapt to new situations.

- Interdisciplinary Approach: Incorporating insights from fields like neuroscience, psychology, and philosophy can inform AI development. However, we should also be open to allowing AI to evolve beyond these human-centric foundations, potentially leading to new insights into the nature of intelligence itself.

- Balance Imitation and Innovation: While human intelligence can serve as a valuable starting point or inspiration, we should encourage AI to transcend these initial models. This might involve developing hybrid systems that combine human-inspired approaches with novel AI-generated strategies.

- Emphasize Explainability and Interpretability: As AI systems become more complex and potentially diverge from human-like reasoning, it becomes crucial to develop methods for understanding and interpreting their decision-making processes. This is essential not only for building trust in AI systems but also for gaining new insights into alternative forms of intelligence.

- Ethical Considerations: As we move beyond mimicking human intelligence, we must also grapple with the ethical implications of creating intelligence that may be fundamentally different from our own. This includes considering questions of control, alignment with human values, and the potential societal impacts of highly capable non-human intelligence.

The Future of AI: Beyond Imitation

As we continue to push the boundaries of AI, it’s crucial to recognize the limitations of the cargo cult mentality. While mimicking human intelligence has certainly led to impressive achievements, true progress towards AGI will likely require us to think beyond the constraints of human cognition.

Imagine AI systems that can perceive and manipulate data in dimensions beyond human comprehension, or that can develop entirely new problem-solving strategies unconstrained by human cognitive biases. These systems might approach challenges in ways we can barely conceive of, potentially leading to breakthroughs in fields ranging from scientific discovery to environmental protection.

However, this path is not without risks. As AI systems become more capable and potentially more alien in their cognition, ensuring their alignment with human values and maintaining meaningful human control become paramount concerns. We must navigate a careful balance between encouraging novel forms of machine intelligence and ensuring that these systems remain beneficial and comprehensible to humanity.

Conclusion: Charting a New Course

The pursuit of AGI is one of the most exciting and challenging endeavors of our time. By recognizing and moving beyond the AI Cargo Cult mentality, we open ourselves to a world of possibilities. Instead of simply recreating human intelligence in silicon, we have the opportunity to expand the very boundaries of what we consider intelligence to be.

This journey will require us to question our assumptions, embrace uncertainty, and remain open to ideas that may initially seem counterintuitive or even alien. It will demand collaboration across disciplines, rigorous scientific inquiry, and a willingness to venture into uncharted territories of cognition and computation.

As we move forward, let us approach the development of AI not as an exercise in imitation, but as an exploration of new frontiers in intelligence. By doing so, we may not only create more capable AI systems but also gain profound new insights into the nature of intelligence itself, potentially revolutionizing our understanding of cognition, consciousness, and our place in the universe.

The future of AI lies not in cargo cult mimicry, but in genuine innovation and discovery. It’s time to move beyond the airstrips of imitation and chart a course for true artificial general intelligence.
