In Tesla’s Q3 2025 earnings call on October 22, Elon Musk dropped a bombshell idea that’s flying under the radar amid talks of robotaxis and Optimus robots. Responding to a question about AI forms and xAI’s massive models, Musk mused about “bored” cars: “Actually, one of the things I thought, if we’ve got all these cars that maybe are bored, while they’re sort of, if they are bored, we could actually have a giant distributed inference fleet… if they’re not actively driving, let’s just have a giant distributed inference fleet.” He projected a future with 100 million Tesla vehicles, each equipped with about 1 kilowatt of high-performance AI inference capability, yielding 100 gigawatts of distributed compute—complete with built-in power, cooling, and conversion.

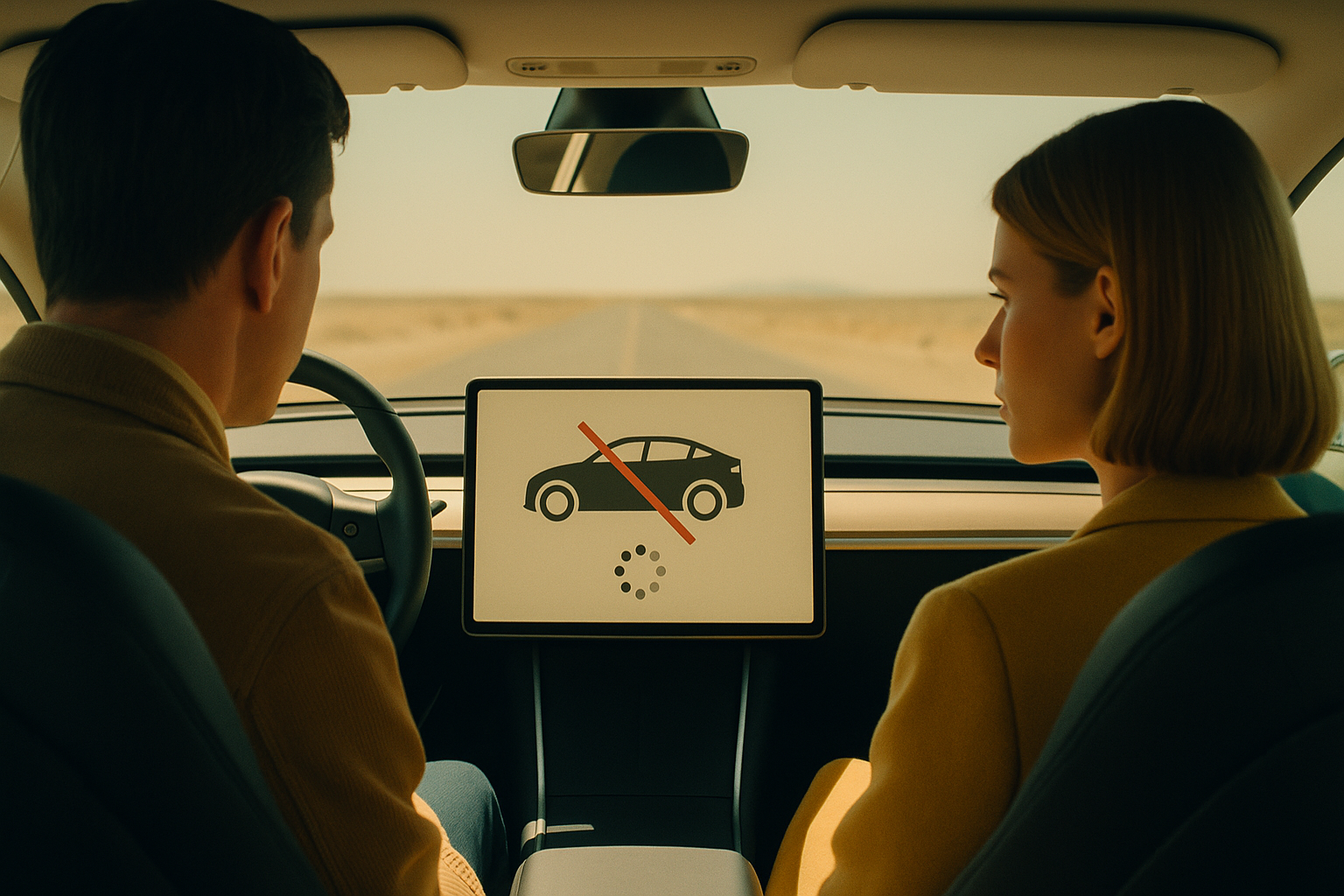

This isn’t Musk’s first rodeo with the concept; he floated similar thoughts in 2024 earnings calls, likening it to “AWS, but distributed inference.” But in 2025, with Tesla’s fleet growing and AI hardware advancing, it feels more tangible. The core insight: Tesla vehicles already pack sophisticated AI chips for Full Self-Driving (FSD). Why let them idle 90% of the time when they could form the world’s largest decentralized data center?

Understanding AI Inference and Why Distribution Matters

To grasp the genius, start with basics. AI involves two phases: training (building models on massive datasets, requiring exascale compute like Tesla’s Dojo supercomputer) and inference (running trained models to generate outputs, like ChatGPT responding to prompts). Inference demands less raw power but scales enormously with real-time users. Today, tech giants cram inference into hyperscale data centers, guzzling energy and costing billions in infrastructure.

Musk’s twist: distribute inference across Tesla’s fleet. Each car has an inference-optimized computer: the current Hardware 4 delivers hundreds of TOPS (trillions of operations per second), and the upcoming AI5 chip, built by Samsung and TSMC, promises more efficiency. Parked vehicles (most sit unused about 23 hours a day) could take on inference tasks once the capability is delivered through an over-the-air software update. A central orchestrator would assign jobs, route data through secure networks, and aggregate results.
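To make the orchestrator idea concrete, here is a minimal sketch of the assign-and-aggregate loop. It is not Tesla's design, which has not been described publicly; the `Car` class, the `dispatch` function, and the round-robin policy are all hypothetical names and choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Car:
    car_id: str
    parked: bool

    def run(self, job: str) -> str:
        # Stand-in for running a model on the car's inference computer.
        return f"{job}:done-by-{self.car_id}"


def dispatch(jobs: list[str], fleet: list[Car]) -> dict[str, str]:
    """Assign each job to a parked car (round-robin) and collect the results."""
    idle = [car for car in fleet if car.parked]
    if not idle:
        raise RuntimeError("no parked cars available")
    results = {}
    for i, job in enumerate(jobs):
        car = idle[i % len(idle)]      # simple round-robin over idle cars
        results[job] = car.run(job)    # aggregate results centrally
    return results


# Hypothetical three-car fleet; car B is driving and is skipped.
fleet = [Car("A", parked=True), Car("B", parked=False), Car("C", parked=True)]
out = dispatch(["j1", "j2", "j3"], fleet)
```

A real system would also need authentication, retries, and load-aware placement; the sketch only shows the basic shape of the loop.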

Scale it: 100 million cars × 1 kW = 100 GW. For context, the largest Nvidia-based clusters draw on the order of hundreds of megawatts, and global data centers consume ~400 TWh annually, which works out to roughly 46 GW of average power. Tesla’s fleet could rival that without new builds, with power from batteries (recharged by owners or solar) and cooling from existing systems. Even in a robotaxi era, where cars might drive 50 hours weekly, 118 idle hours remain for compute.
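The arithmetic is easy to check, and it helps to compare like with like: gigawatts measure power, while terawatt-hours per year measure energy. The short script below uses only the figures quoted above; the variable names are illustrative, and the assumption that every car computes during every idle hour is an upper bound, not a forecast.

```python
FLEET_SIZE = 100_000_000        # cars (Musk's projection)
KW_PER_CAR = 1.0                # kW of inference hardware per car
HOURS_PER_WEEK = 7 * 24         # 168
DRIVING_HOURS_PER_WEEK = 50     # robotaxi-era usage from the text
HOURS_PER_YEAR = 8760
DATACENTER_TWH_PER_YEAR = 400   # global data center consumption from the text

fleet_gw = FLEET_SIZE * KW_PER_CAR / 1e6            # kW -> GW
idle_hours = HOURS_PER_WEEK - DRIVING_HOURS_PER_WEEK
idle_fraction = idle_hours / HOURS_PER_WEEK

# Upper bound: every car computes during every idle hour.
annual_twh = fleet_gw * HOURS_PER_YEAR * idle_fraction / 1000   # GWh -> TWh

# Average power implied by the data center energy figure.
datacenter_avg_gw = DATACENTER_TWH_PER_YEAR * 1000 / HOURS_PER_YEAR

print(f"fleet peak: {fleet_gw:.0f} GW, idle hours/week: {idle_hours}")
print(f"fleet upper bound: {annual_twh:.0f} TWh/yr")
print(f"data centers today: about {datacenter_avg_gw:.0f} GW average")
```

Under these assumptions the fleet's ceiling is a little over 600 TWh per year, against an average draw of about 46 GW for today's data centers.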

The Tesla Advantage: Hardware, Network, and Ecosystem

Tesla isn’t starting from scratch. Every vehicle since 2019 has FSD hardware, evolving from HW3 (144 TOPS) to HW4 (over 300 TOPS) and soon AI5. These chips excel at vision-based inference, perfect for edge AI. Musk noted excess AI5 production could even feed data centers, but the fleet is the killer app.

Connectivity? Tesla’s Premium Connectivity and Starlink integration enable high-bandwidth links. Parked at homes or chargers, cars tap Wi-Fi or cellular. Security leverages Tesla’s encryption for FSD data uploads.

Ecosystem synergy: Inference could power xAI’s Grok (Musk contrasted car-sized models with Grok’s giants), optimize energy grids, or run third-party apps. Owners could opt in for credits toward charging, Supercharging, or FSD subscriptions, turning “bored” cars into earners.

Benefits: Efficiency, Revenue, and Global Impact

Economically, it’s a no-brainer. Traditional data centers cost $10-20 million per MW upfront, plus ongoing energy bills. Tesla’s fleet amortizes costs across vehicles already sold. At scale, it could generate billions in high-margin revenue selling inference to AI firms, developers, or even competitors.
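For a rough sense of scale, the sketch below prices 100 GW of conventional data center capacity at the $10-20 million per MW quoted above. It compares electrical capacity only and assumes, generously, that a kilowatt in a parked car is worth the same as a kilowatt in a purpose-built facility, which is unlikely to hold in practice.

```python
COST_PER_MW_USD = (10e6, 20e6)   # low and high estimates from the text
FLEET_GW = 100                   # projected fleet inference capacity

fleet_mw = FLEET_GW * 1000       # GW -> MW
low_usd, high_usd = (cost * fleet_mw for cost in COST_PER_MW_USD)

print(f"equivalent build-out: ${low_usd / 1e12:.0f}-{high_usd / 1e12:.0f} trillion")
```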

Environmentally, distributed compute reduces transmission losses and leverages renewables (home solar charges cars). Geographically dispersed, it’s resilient to outages, with no single point of failure like a Virginia data center flood.

For AI: Lower latency for regional tasks (e.g., European queries routed to EU cars). It democratizes compute, akin to SETI@home but profitable.

Musk reiterated confidence post-call: “I am increasingly confident that this idea could work,” quoting the original post. Community buzz echoes this, with discussions on latency mitigation via edge prioritization.

Challenges and Realistic Hurdles

Skeptics abound. Latency: A round trip from user to car over home Wi-Fi or cellular could add tens to hundreds of milliseconds, which is fine for batch jobs but tricky for real-time chat. Solutions? Prioritize plugged-in, high-bandwidth cars, and fall back to hybrid central-distributed models.
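One way to picture the hybrid approach is a router that sends a job to the fleet only when some plugged-in car can meet the job's latency budget, and otherwise falls back to a central data center. This is a sketch under assumptions: the `Candidate` fields, the `route` function, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    car_id: str
    plugged_in: bool
    bandwidth_mbps: float
    rtt_ms: float   # measured round-trip time to the car


def route(latency_budget_ms: float, candidates: list[Candidate]) -> str:
    """Return the chosen car's id, or "central" if no car qualifies."""
    eligible = [
        c for c in candidates
        if c.plugged_in and c.rtt_ms <= latency_budget_ms
    ]
    if not eligible:
        return "central"   # hybrid fallback to a data center
    # Among eligible cars, prefer the one with the most bandwidth.
    return max(eligible, key=lambda c: c.bandwidth_mbps).car_id


cars = [
    Candidate("A", plugged_in=True, bandwidth_mbps=100, rtt_ms=80),
    Candidate("B", plugged_in=False, bandwidth_mbps=500, rtt_ms=20),
    Candidate("C", plugged_in=True, bandwidth_mbps=300, rtt_ms=120),
]

chat_target = route(50, cars)      # tight budget: no plugged-in car is fast enough
batch_target = route(1000, cars)   # loose budget: highest-bandwidth plugged-in car
```

With these sample values the real-time job stays central while the batch job lands on car C.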

Battery drain: 1 kW for hours eats range. Mitigate by restricting to charging sessions or adding buffer batteries. Owners need incentives to offset wear.
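How much range is at stake? The estimate below multiplies load by time and divides by pack size. The 75 kWh pack is an assumed round figure for illustration, not a specification for any particular model, and the function names are hypothetical.

```python
def drain_kwh(hours: float, load_kw: float = 1.0) -> float:
    """Energy drawn by a constant load over a period, in kWh."""
    return hours * load_kw


def drain_fraction(hours: float, load_kw: float = 1.0, pack_kwh: float = 75.0) -> float:
    """Share of the battery pack consumed (pack size is an assumption)."""
    return drain_kwh(hours, load_kw) / pack_kwh


overnight = drain_fraction(10)   # ten hours of unplugged compute at 1 kW
print(f"10 h at 1 kW uses {drain_kwh(10):.0f} kWh, "
      f"or {overnight:.0%} of an assumed 75 kWh pack")
```

That is a noticeable hit for an unplugged car, which is why limiting compute to charging sessions is the more plausible starting point.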

Reliability: Cars move and disconnect. Redundancy and task chunking, as in volunteer-computing projects like SETI@home, help. Regulations on data privacy, especially in Europe, demand ironclad compliance.
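Chunking with redundancy can be sketched in a few lines: split a batch into small pieces, hand each piece to more than one car, and keep the first result that comes back. Everything here is hypothetical, including the function names, the doubling "work", and the fixed online/offline flags that stand in for cars driving away mid-task.

```python
def chunk(items: list[int], size: int) -> list[list[int]]:
    """Split a batch into fixed-size pieces (the last may be shorter)."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_with_redundancy(chunks, cars: dict[str, bool], replicas: int = 2):
    """Assign each chunk to `replicas` cars; keep the first available result.

    `cars` maps car id -> True if the car stays connected for the task.
    Assumes replicas <= len(cars) so the assigned cars are distinct.
    """
    car_ids = list(cars)
    completed = {}
    for idx, part in enumerate(chunks):
        # Rotate through the fleet so replicas land on different cars.
        assigned = [car_ids[(idx + r) % len(car_ids)] for r in range(replicas)]
        for car_id in assigned:
            if cars[car_id]:
                # Stand-in for inference: double each input value.
                completed[idx] = (car_id, [x * 2 for x in part])
                break
    return completed


cars = {"A": True, "B": False, "C": True}   # car B drives away mid-task
pieces = chunk([1, 2, 3, 4, 5], size=2)
done = run_with_redundancy(pieces, cars)
```

Here the chunk first assigned to car B is still completed by its backup, car C. A chunk whose replicas all drop out would simply be missing from `done` and would need to be re-queued.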

Not all inference fits—large models like Grok stay centralized. Start with Tesla-internal tasks: FSD simulations, Optimus training.

Experts note feasibility but complexity: “Technically possible, but rife with issues,” per 2024 analyses. DePIN projects like OGPU explore similar decentralized GPU networks.

Broader Implications and the Road Ahead

This elevates Tesla from EV maker to AI infrastructure titan. Paired with grid buffering (idle batteries stabilizing renewables), the fleet multitasks: transport, energy storage, compute.

Long-term: 100 GW inference accelerates AGI, powers robotaxis, even offsets owner costs. Musk envisions cars earning while parked, flipping ownership economics.

Tesla’s Q3 showed record revenues, but this idea hints at trillions in untapped value. As Musk said, it’s a “pretty significant asset.” In a compute-hungry world, the fleet isn’t just mobile—maybe it’s the future of cloud.